In the crazy world of web scraping, HTTP headers play a crucial yet often overlooked role. These headers are key to successful data extraction, acting as a gateway for communication between your web scraping tools and the target server.

This guide demystifies HTTP headers, explaining their importance in web scraping and how they can be effectively utilized. Whether you’re a beginner or an experienced scraper, understanding HTTP headers is essential for navigating the digital landscape efficiently and ethically.

Let’s dive into the world of HTTP headers and unlock the secrets to more effective web scraping.

What Exactly Are HTTP Headers?

HTTP Headers are an essential part of web communications, existing in both HTTP requests and responses. Think of them as a hidden, yet crucial, layer of conversation between your web browser and the server hosting the website you’re visiting. These headers carry important information that helps in processing each HTTP transaction.

When a browser sends a request to a server (like when you load a webpage), it includes HTTP Headers that carry details like the type of web browser (user agent), the types of data it can receive (accept), and the language preferred. Similarly, when a server responds, its headers might contain information about the type of content (content-type), server details, and how the data should be cached.

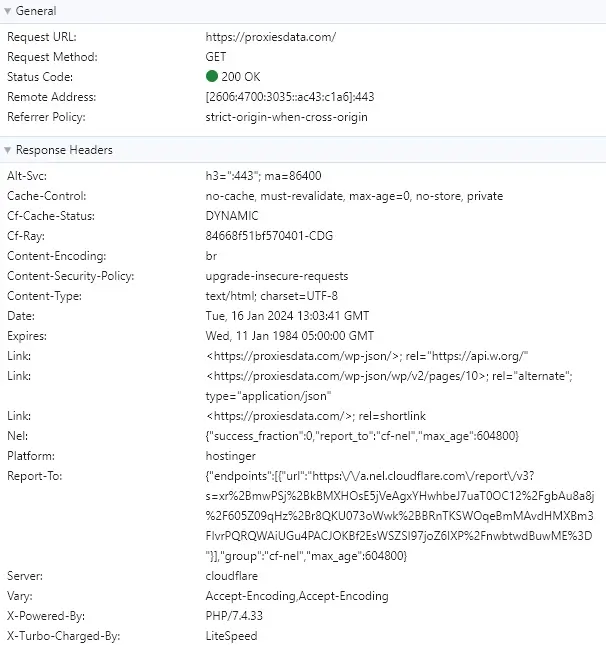

Here’s an example of how these headers look like on our website:

Types of HTTP Headers

HTTP headers are crucial in dictating how web servers and clients communicate. They fall into different categories, each serving a unique purpose in the web scraping process. Let’s explore the three main types: General, Request, and Response Headers.

General Headers

General headers are used in both request and response messages but are not directly related to the data in the body of the message. They include information about how the data should be handled, cache control, and more.

Examples of General Headers:

Cache-Control: Directs the behavior of cache mechanisms along the request-response chain. Example:Cache-Control: no-cacheensures that the stored data is not used to satisfy the request.Connection: Controls whether the network connection stays open after the current transaction. Example:Connection: keep-alivekeeps the connection open for multiple requests.

Code Example:

In Python, using requests library, you can handle general headers as follows:

import requests

url = 'https://example.com'headers = {'Cache-Control': 'no-cache','Connection': 'keep-alive'}

response = requests.get(url, headers=headers)

Request Headers

Request headers contain more information about the resource to be fetched or about the client itself. In web scraping, they are vital for simulating a real user’s browser behavior.

Significance in Web Scraping:

- User-Agent: Identifies the browser and operating system. Changing this can help mimic different devices.

- Accept-Language: Indicates the preferred language, which can affect the content returned by the server.

Common Request Headers Used in Scraping:

User-Agent: User-Agent: Mozilla/5.0 (Windows NT 10.0; Win64; x64)Accept-Language: Accept-Language: en-US,en;q=0.5

Code Example:

headers = {'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64)','Accept-Language': 'en-US,en;q=0.5'}

response = requests.get(url, headers=headers)

Response Headers

Response headers provide information about the server’s response and the returned data. They are crucial for understanding how to process the data collected during scraping.

Understanding Server Responses:

Content-Type: Indicates the media type of the returned data, e.g., HTML, JSON, XML. Essential for parsing the data correctly.Set-Cookie: Used by servers to send cookies back to the client, affecting subsequent requests.

How Response Headers Affect Data Collection:

Content-Type:Content-Type: text/html; charset=UTF-8determines how to parse the response.Set-Cookie: Used for session handling in subsequent requests.

Code Example:

response = requests.get(url)

content_type = response.headers.get('Content-Type')cookies = response.cookies

By understanding and effectively utilizing these different types of HTTP headers, you can enhance your web scraping accuracy and efficiency, making your bots appear more like human users and gaining access to the data you need.

HTTP Headers and Web Scraping

Understanding and utilizing HTTP headers is crucial in web scraping. These headers play a significant role in how web servers interpret and respond to your requests.

Why HTTP Headers Matter in Scraping

As we know already, HTTP headers are a critical component in the web scraping process, serving as the primary communication interface between the client (scraper) and the server. They play a significant role in ensuring successful data extraction by mimicking human behavior and avoiding detection. Let’s delve into why they are so important.

Mimicking Human Browsing Patterns:

One of the key strategies in successful web scraping is to make requests appear as though they are coming from a real user’s browser. Web servers scrutinize HTTP headers to assess the nature of each request. By carefully customizing these headers, scrapers can simulate the behavior of a regular internet user. This includes varying the User-Agent to represent different browsers and devices, and appropriately setting other headers like Accept-Language and Referer. This approach helps in making scraping activities blend in, reducing the likelihood of being identified and blocked as automated bots.

Avoiding Anti-Scraping Measures:

Navigating anti-scraping measures is a crucial aspect of web scraping. A primary strategy for circumventing these measures is the effective use of HTTP headers. By altering headers such as User-Agent, Referer, and Accept-Language, scrapers can mimic the browsing patterns of real users, reducing the likelihood of detection as automated bots.

However, modifying HTTP headers is only part of the solution. To further enhance the effectiveness of your scraping efforts, incorporating residential proxies is key. Some proxy services offer genuine residential IPs, which are less prone to being blocked compared to datacenter IPs or other proxy servers. This approach allows your scraping requests to appear more authentic, blending in with regular internet traffic.

Utilizing the best residential proxy services provides additional advantages, such as a diverse pool of IP addresses and geo-targeting capabilities. This is especially beneficial for accessing geo-specific content and maintaining a lower profile while scraping. In essence, combining well-managed HTTP headers with top-tier residential proxies creates a more robust and discreet web scraping strategy, significantly reducing the chances of triggering anti-scraping measures.

Customizing HTTP Headers for Scraping

Customizing HTTP headers is a fundamental technique in web scraping, enabling scrapers to access data more effectively and responsibly. Two of the most important aspects of header customization are setting user-agents and managing cookies and sessions. Let’s explore each of these in detail.

Setting User-Agents:

The User-Agent header plays a pivotal role in web scraping. It provides the server with information about the type of device and browser making the request. In the context of web scraping, altering the User-Agent header is vital for mimicking different browsing environments. This is especially important when a website presents different content or formats based on the user’s device or browser. By rotating through various User-Agent strings, scrapers can avoid detection as they don’t consistently present the same identity.

Moreover, it allows for testing how websites respond to different clients, which is essential for comprehensive data collection strategies.

Managing Cookies and Sessions:

Websites commonly use cookies to track user sessions. In web scraping, managing the Cookie headers is crucial for maintaining continuity in a session. This can be particularly important when accessing data that requires a user to be logged in or when navigating through multi-page sequences where session data is key. Proper handling of cookies ensures that each subsequent request within a session is recognized as coming from the same user. This not only aids in accessing required data but also in appearing as a returning, legitimate user rather than a new, potentially suspicious requester.

Effective cookie management helps maintain the integrity of the session and can significantly reduce the chances of being flagged as a scraper and easily bypass IP bans.

Code Example:

import requests

url = 'https://example.com'headers = {'User-Agent': 'Mozilla/5.0 (X11; Linux x86_64)','Accept-Language': 'en-US,en;q=0.9'}

session = requests.Session()response = session.get(url, headers=headers)

# Use session for subsequent requests to maintain continuityresponse = session.get('https://example.com/page2')

Common Challenges and Solutions in Web Scraping

In web scraping, certain challenges frequently arise, particularly regarding how servers respond to scraping attempts. Let’s discuss two common issues: handling redirects and dealing with rate limits.

Handling Redirects:

Redirects are a common feature of web navigation, but they can pose significant challenges in web scraping. They might redirect a scraper away from the target data or, worse, lead to an infinite loop. The key to managing redirects is handling HTTP status codes, specifically 301 (Moved Permanently) and 302 (Found, or Temporary Redirect). When a scraper encounters these status codes, it’s essential to read the Location header in the response and update the request URL to this new location. This step ensures that the scraper follows the path intended by the website, similar to how a browser would, and reaches the correct data destination. Proper handling of redirects is not just about reaching the right data; it’s also about respecting the website’s structure and navigation logic.

Dealing with Rate Limits:

Many websites implement rate limits to control the amount of data accessed within a certain time frame, a measure specifically designed to prevent or limit scraping. Rate limits are usually communicated via HTTP headers like Retry-After, which indicates how long the scraper should wait before making another request. Recognizing and respecting these headers is critical for ethical scraping practices. Ignoring rate limits can lead to the scraper being blocked or banned from the site. By adhering to these limits, scrapers demonstrate responsible behavior, ensuring they do not overload the website’s server and maintain access to the data.

Code Example for Handling Redirects and Rate Limits:

response = requests.get(url, headers=headers, allow_redirects=False)

if response.status_code in [301, 302]:new_url = response.headers['Location']response = requests.get(new_url, headers=headers)

if response.status_code == 429:retry_after = int(response.headers.get('Retry-After', 60))time.sleep(retry_after)response = requests.get(url, headers=headers)

In this section, we’ve covered why HTTP headers are a fundamental part of web scraping, how to customize them for effective scraping, and ways to navigate common challenges. These insights are key for anyone looking to conduct efficient and respectful web scraping.

Best Practices for Using HTTP Headers in Web Scraping

Utilizing HTTP headers effectively is crucial for efficient and ethical web scraping. Here’s a 101 list of best practices to always follow:

- Rotate User-Agents: Regularly change the

User-Agentstring to avoid detection by websites that block scrapers. - Respect

robots.txt: Always check and comply with the website’srobots.txtfile before scraping. - Manage Cookies: Handle cookies appropriately to maintain session integrity and avoid detection.

- Set Acceptable Referrers: Use the

Refererheader judiciously to mimic natural browsing behavior. - Handle Redirects Properly: Pay attention to 301 and 302 status codes and follow the redirects correctly.

- Adhere to Rate Limits: Respect

Retry-Afterheaders and other indicators of rate limiting to prevent being blocked. - Use Custom Headers Sparingly: Avoid overuse of custom headers as they can make your requests stand out.

- Mimic Browser Requests: Ensure your headers closely resemble those of a typical browser request.

- Keep Headers Consistent: Avoid drastically changing headers between requests in the same session.

- Handle Compression: Use headers like

Accept-Encodingto handle compressed data efficiently. - Secure Your Requests: Utilize headers like

Upgrade-Insecure-Requeststo promote secure data transactions. - Prioritize Content Types: Use the

Acceptheader to specify the type of content you prefer to receive. - Monitor Server Responses: Regularly check response headers for clues about how your requests are being treated.

- Stay Updated: Keep abreast of changes in how browsers and servers handle headers.

- Be Ethical: Always scrape responsibly and consider the impact of your actions on the target server and its resources.

By following these best practices, you can enhance the effectiveness of your web scraping efforts while maintaining respectful and responsible interactions with web servers.

Conclusion

In this comprehensive guide provided by Proxies Data, we’ve explored the intricate world of HTTP headers and their pivotal role in the art of web scraping. From understanding the different types of headers to mastering their customization, and overcoming common scraping challenges, we’ve covered the essential strategies for effective data extraction.

Remember, the key to successful web scraping lies in the subtle balance between technical savvy and ethical practices.

Frequently Asked Questions

HTTP headers are part of HTTP requests and responses, providing essential information about the client’s browser, the requested page, the server, and more.

Yes, headers like User-Agent can influence the content and format of the web pages served, as some websites deliver different data based on the client’s system and preferences.

Modifying headers is often done for testing purposes, to enhance privacy, or to troubleshoot issues related to web browsing and server interactions.

The User-Agent header identifies the browser and operating system to the server, which can then deliver content suitable for that specific environment.

Yes, certain headers play a crucial role in web security by controlling resources’ caching, defining content types, and managing cross-site scripting policies.

Daniel is an SEM Specialist with many years of experience and he has a lot of experience with proxies and web data collection.