Welcome to this beginner-friendly guide on how to scrape Zillow data using Python! By following this tutorial, you’ll learn the basics of web scraping and how to extract property data from Zillow. Don’t worry if you’re new to this: we’ve got you covered with clear explanations and simple code examples. So, let’s dive in!

What You’ll Need

To get started, you’ll need the following:

- Python installed on your computer.

- A code editor (e.g., Visual Studio Code).

- A stable internet connection.

- A little patience and enthusiasm!

Setting Up Your Environment

To ensure a smooth web scraping experience, let’s set up your Python environment using Visual Studio Code (VSCode), a popular code editor. This section will guide you through installing the required Python libraries and configuring your VSCode workspace.

Step 1: Install Visual Studio Code

If you haven’t already, download and install Visual Studio Code from the official website: https://code.visualstudio.com/. Download the default version for your operating system.

Step 2: Install the Python Extension

To work with Python in VSCode, you’ll need to install the official Python extension. Follow these steps:

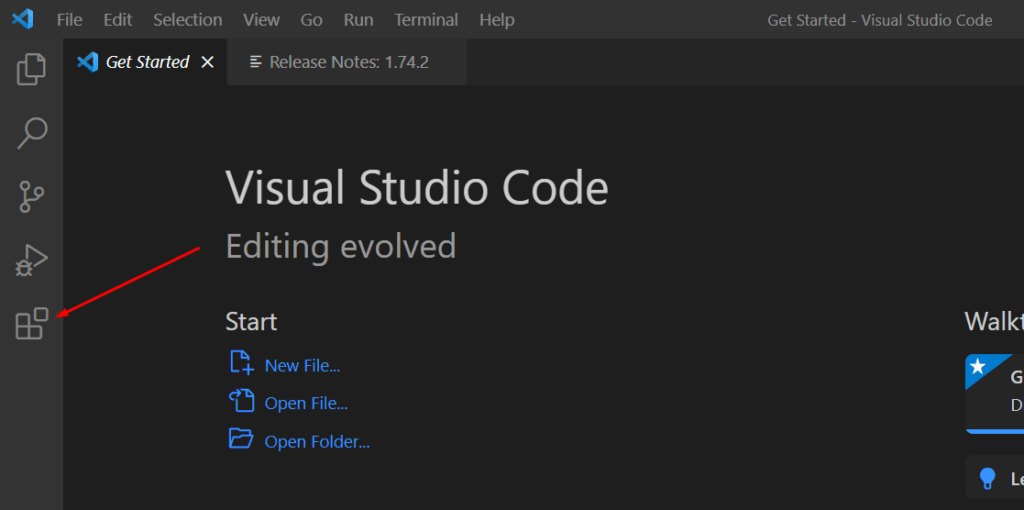

- Open Visual Studio Code.

- Click on the Extensions icon in the Activity Bar on the side of the window.

- Search for “Python” in the Extensions search bar.

- Find the official Python extension by Microsoft, and click the “Install” button.

Step 3: Create a New Project Folder

Create a new folder for your Zillow scraper project. You can do this directly in VSCode:

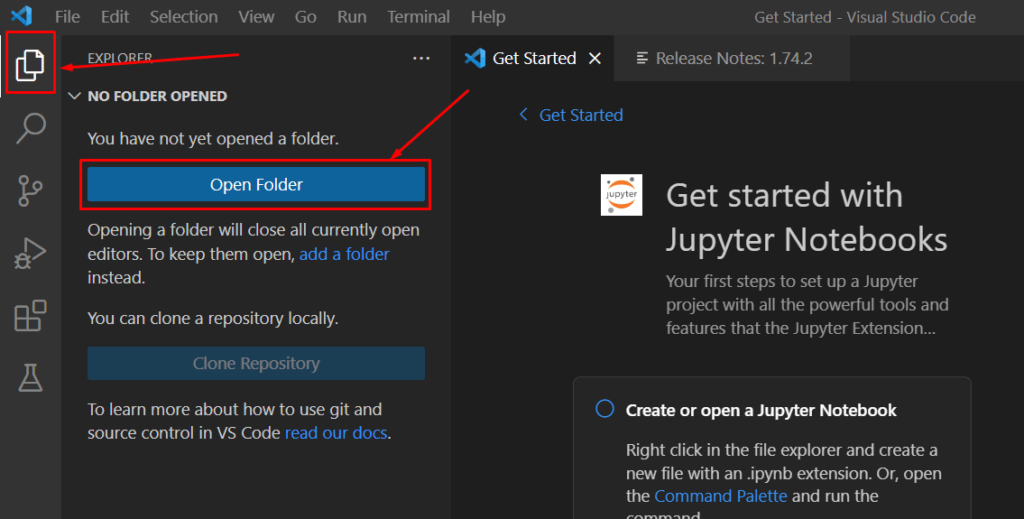

- Click on the Explorer icon in the Activity Bar.

- Click on “Open Folder” and create a new folder for your project.

- Click “Select Folder” to open the folder in VSCode.

Step 4: Create a Python File

In your project folder, create a new Python file:

- Right-click on the empty space in the Explorer.

- Click on “New File” and name it “zillow_scraper.py”.

Step 5: Install the Required Libraries

You’ll need to install a couple of libraries to make the scraping process easier:

- Requests: A library for making HTTP requests.

- BeautifulSoup: A library for parsing HTML and XML documents.

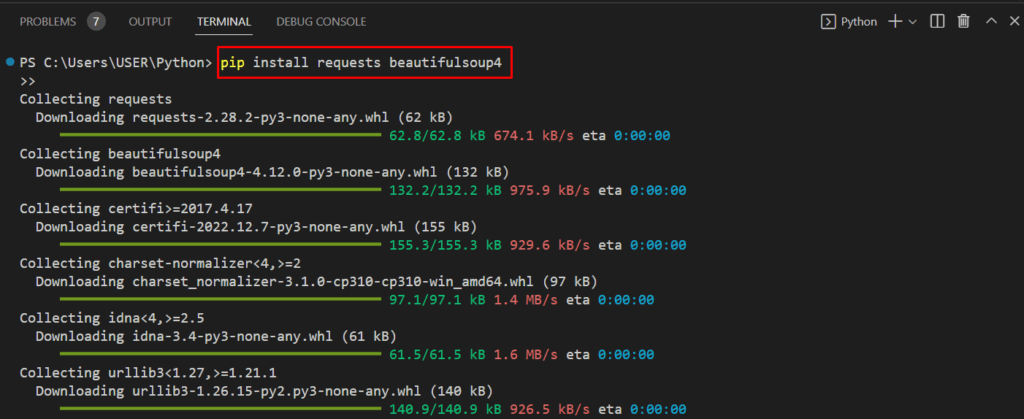

To install these libraries, open the integrated terminal in VSCode:

- Click on “Terminal” in the top menu.

- Select “New Terminal”.

Now, type the following command in the terminal:

pip install requests beautifulsoup4
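To confirm that the installation worked, a quick check like the one below can help. This is a minimal sketch that uses only Python’s standard library; the helper name `installed_versions` is made up for this example and is not part of either library.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_versions(packages):
    """Map each package name to its installed version, or None if it is missing."""
    found = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


# The two packages installed by the pip command above.
for name, ver in installed_versions(["requests", "beautifulsoup4"]).items():
    print(f"{name}: {ver or 'not installed'}")
```

If either line reports “not installed”, the pip command was probably run with a different Python interpreter than the one selected in VSCode.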

You’re all set! Your Python environment is ready for web scraping in Visual Studio Code. You can now proceed with writing the code to scrape Zillow property listings, as explained in the following sections of this guide.

The Basics of Web Scraping

Web scraping is the process of extracting data from websites. In our case, we’ll be extracting property data from Zillow. To do this, we’ll send an HTTP request to a Zillow URL, receive the HTML response, and parse the data we need using BeautifulSoup.

Step 1: Send an HTTP Request

Using the requests library, you can send an HTTP request to Zillow’s URL. Here’s an example:

import requests

url = "https://www.zillow.com/homes/for_sale/"

response = requests.get(url)

print(response.content)

This code will print the raw HTML of the Zillow page. If you see a block of HTML text, you’re on the right track! Note that the response contains only the static HTML, not content that the page loads later with JavaScript, and that Zillow can block your request.
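Because a request can be blocked or can hang, it may help to wrap the call in a small function that sets a timeout and checks the status code. The sketch below is one possible approach, not a guaranteed way past Zillow’s defenses; the function name `fetch_html`, the header values, and the 10-second timeout are all assumptions chosen for illustration.

```python
import requests

# Assumption: browser-like headers; the exact values are illustrative only.
HEADERS = {
    "User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64)",
    "Accept-Language": "en-US,en;q=0.9",
}


def fetch_html(url, session=None, timeout=10):
    """Return the page HTML as text, raising an exception if the request fails."""
    if session is None:
        session = requests.Session()
    response = session.get(url, headers=HEADERS, timeout=timeout)
    # raise_for_status() turns 4xx/5xx responses (such as a 403 when blocked)
    # into an exception instead of silently returning an error page.
    response.raise_for_status()
    return response.text
```

Calling `fetch_html("https://www.zillow.com/homes/for_sale/")` would then either return the HTML or raise a `requests` exception that tells you what went wrong.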

Step 2: Parse the HTML Response

Now, let’s use BeautifulSoup to parse the HTML content and extract the data we need. For this guide, we’ll focus on extracting property details such as the address, price, and number of bedrooms.

from bs4 import BeautifulSoup

soup = BeautifulSoup(response.content, "html.parser")

property_listings = soup.find_all("div", class_="list-card-info")

for listing in property_listings:
    address = listing.find("address", class_="list-card-addr").text
    price = listing.find("div", class_="list-card-price").text
    details = listing.find("ul", class_="list-card-details").text
    print(f"Address: {address}")
    print(f"Price: {price}")
    print(f"Details: {details}")
    print("\n")

This code will output the address, price, and details for each property listing on the Zillow page. Please note that the class names of these elements may change over time, so you may have to adjust them in the code. This fragility is one of the main reasons why manual web scraping can be difficult.
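When a class name changes or a listing lacks a field, `find()` returns `None` and calling `.text` on it raises an error. The sketch below shows one way to make the parsing more tolerant. The helper names `text_or_none` and `parse_listings` and the sample markup are hypothetical; the class names are simply the ones used above and may not match Zillow’s current page.

```python
from bs4 import BeautifulSoup


def text_or_none(parent, tag, class_name):
    """Return the text of the first matching child element, or None if absent."""
    element = parent.find(tag, class_=class_name)
    if element is None:
        return None
    return element.get_text(" ", strip=True)


def parse_listings(html):
    """Extract address, price, and details from each listing card."""
    soup = BeautifulSoup(html, "html.parser")
    results = []
    for card in soup.find_all("div", class_="list-card-info"):
        results.append({
            "address": text_or_none(card, "address", "list-card-addr"),
            "price": text_or_none(card, "div", "list-card-price"),
            "details": text_or_none(card, "ul", "list-card-details"),
        })
    return results


# Hypothetical markup for illustration; the second card has no price or details.
SAMPLE_HTML = """
<div class="list-card-info">
  <address class="list-card-addr">123 Main St, Springfield</address>
  <div class="list-card-price">$350,000</div>
  <ul class="list-card-details"><li>3 bds</li><li>2 ba</li></ul>
</div>
<div class="list-card-info">
  <address class="list-card-addr">456 Oak Ave, Springfield</address>
</div>
"""

for listing in parse_listings(SAMPLE_HTML):
    print(listing)
```

Missing fields come back as `None` instead of stopping the loop, which makes it easier to spot when a selector has gone stale.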

Handling Proxies and Throttling

Web scraping can be resource-intensive, and some websites may limit or block your requests. To overcome this, you can use proxies to change your IP address after a certain number of requests. The best residential proxy providers offer a range of options, including SOCKS5 proxies, which are also good for web scraping.
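As a rough illustration of switching proxies after a certain number of requests, the sketch below picks a proxy based on how many requests have been made so far. The proxy addresses, the function name `proxies_for_request`, and the default of five requests per proxy are all placeholders, not recommendations.

```python
# Hypothetical proxy endpoints; replace them with addresses from your provider.
PROXY_URLS = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
    "http://user:pass@proxy3.example.com:8000",
]


def proxies_for_request(request_index, proxy_urls, requests_per_proxy=5):
    """Pick a proxy for the Nth request, switching every few requests."""
    position = (request_index // requests_per_proxy) % len(proxy_urls)
    proxy_url = proxy_urls[position]
    # requests expects a mapping from URL scheme to proxy URL.
    return {"http": proxy_url, "https": proxy_url}


# Show which proxy each of the first 12 requests would use.
for i in range(12):
    print(i, proxies_for_request(i, PROXY_URLS)["https"])
```

The returned dictionary can be passed to the requests library, for example `requests.get(url, proxies=proxies_for_request(i, PROXY_URLS))`. SOCKS5 addresses (`socks5://...`) should work the same way once the optional SOCKS support is installed with `pip install requests[socks]`.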

Additionally, consider implementing delays between requests to avoid overwhelming the target website. This can be done using Python’s time.sleep() function.
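A delay can be added with a small loop like the one below. This is only a sketch: the function name `fetch_with_delay` and the 2 to 5 second range are assumptions, and `fetch` stands in for whatever function you use to download a page.

```python
import random
import time


def fetch_with_delay(urls, fetch, min_delay=2.0, max_delay=5.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing a random interval between calls."""
    pages = []
    for index, url in enumerate(urls):
        if index > 0:
            # A randomized pause avoids a perfectly regular request pattern.
            sleep(random.uniform(min_delay, max_delay))
        pages.append(fetch(url))
    return pages
```

The `sleep` parameter defaults to `time.sleep`, so normal use needs only the list of URLs and a fetch function; passing a different callable makes the pauses easy to skip while testing.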

Conclusion

Congratulations! You’ve learned how to scrape Zillow and just built a simple Zillow scraper using Python. Now you can expand on this knowledge to create even more powerful Zillow scrapers. Of course, the code presented here doesn’t help with dynamic content and can encounter CAPTCHAs and errors.

If you’d like to save time and effort, there are many high-quality automatic tools that can serve as the best Zillow scrapers. These great web scraping tools often come with advanced features and support, making it easier to gather the data you need without building your own scraper from scratch. Some of these tools also come with built-in proxy management and advanced data extraction capabilities, further simplifying the process.

So, whether you choose to continue developing your own Zillow scraper or explore the world of pre-built Zillow scraping tools, you’re now ready to tackle the challenges of web scraping.

Frequently Asked Questions

It can be difficult if you don’t have any programming experience. Also, you may encounter some challenges like blockages.

No, this is a simple tutorial on the basics of scraping Zillow.

Only you can answer that question. If you feel that you are not ready yet to create a scraper of your own, then the answer would be yes.

Daniel is an SEM Specialist with many years of experience, including extensive work with proxies and web data collection.